SAP BTP Kyma for an Angular, .NET and PostgreSQL Project

Table of Contents

- Deploying an Angular/.NET App to SAP BTP Kyma

- PREREQUISITES

- THE APP

- SAP BTP KYMA

- SAP

- BTP

- BTP Cockpit

- Kyma

- SAP BTP Trial Account

- DEPLOYMENT

- 1. Login to BTP Cockpit

- 2. Provision a Kyma Environment

- 3. Create Namespace on Kyma

- 4. Access Kyma From Dev machine

- 4.1 Create Access Token

- 4.2 Set Kubeconfig file as Default

- 4.3 Admin Access

- 5. Database

- 5.0 Describe the Files

- 5.1 Deployment

- 5.2 Data

- 6. Backend

- 6.1 Create Docker Image

- 6.2 Push Docker Image To Docker Hub

- 6.3 Create Deployment

- 6.4 Create Service

- 6.5 Create Ingress Service

- 7. Frontend

- 7.0 Project Configuration

- 7.1 Create Docker Image

- 7.2 Push Docker Image

- 7.3 Create Deployment

- 7.4 Create Service

- 7.5 Update Ingress Service

- CONCLUSION

Deploying an Angular/.NET App to SAP BTP Kyma

In this blog post/tutorial, we will deploy an Angular frontend with a .NET backend, connected to a PostgreSQL database, to the SAP BTP Kyma environment. It can be considered an intermediate to advanced tutorial.

This came out of a recent study to see whether we can run our project on BTP Kyma, and for all intents and purposes, it was a success. At its heart, Kyma is a Kubernetes system, and in the background it runs on other hyperscalers, such as AWS and Azure, so there is no reason it shouldn't work.

We will see what advantages running our project on Kyma provides over just running it on AWS. Easier integration with SAP Public or Private Cloud? Fast-tracking to SAP Marketplace listings?

Warning!!! This will probably be a lengthy tutorial.

As YouTubers would say, let's get into it.

PREREQUISITES

There are a few prerequisites.

- SAP Universal ID

  This has some prerequisites of its own:

  - An email address

  - A phone number

- An Angular/.NET project with a PostgreSQL database

  This is what we will be deploying in this tutorial. Of course, you can also use the tutorial to get a general idea of how to deploy "anything" to Kyma.

  Because my example is an ongoing internal project, I won't be able to share the code.

- Docker

  I will assume that you know how to write a Dockerfile, or that one already exists for each of your projects.

  We also need Docker installed on the local machine to build the images and push them to Docker Hub.

- Docker Hub account

  We will be pushing our Docker images to Docker Hub. This is free and you can create an account with just an email.

- kubectl

  We need kubectl installed on the local machine.

THE APP

The app is named Ocean, our MES software that is currently under development.

For the frontend, we are using Angular.

For the backend, we are using .NET.

And for the database, we are using PostgreSQL.

SAP BTP KYMA

Now, let's talk a little bit about SAP, BTP and Kyma.

SAP

In my previous life, I was an ABAP developer, so I think I know a thing or two about SAP. SAP is mostly known for its ERP software; in fact, that is what most people think of when you say SAP. But I think SAP wants to change that notion and be known as a software solution provider, not just an ERP software developer. And I think they are moving in that direction.

BTP

BTP stands for "Business Technology Platform", and it's all the rage right now. It is an umbrella term for SAP's cloud solutions.

For this tutorial's purposes, our only interest in BTP is the BTP Cockpit.

BTP Cockpit

We will be using BTP Cockpit to provision ourselves a Kyma cluster and move straight to our Kyma Dashboard.

Kyma

Kyma is a managed Kubernetes service in the cloud.

SAP BTP Trial Account

???

DEPLOYMENT

This is where the actual tutorial begins.

To summarize what we will do:

- We will prepare our Kyma environment for our project.

- We will prepare Docker images for the frontend and backend projects and push them to Docker Hub. The database uses the stock postgres image.

- We will create deployments for each of these projects on Kyma.

- We will create services for each of these projects on Kyma.

- We will create a load balancer service to open the db to the internet.

- We will create an ingress service for routing operations between the frontend and the backend.

We will begin by provisioning our Kyma environment from the BTP Cockpit. Then we will create a namespace and deploy our database. We will encounter problems on the way, and to solve them, we will switch back to configuring our Kyma environment. The rest of the tutorial will proceed like that: we will do some deployment, encounter some problem and solve it.

Let's begin.

1. Login to BTP Cockpit

2. Provision a Kyma Environment

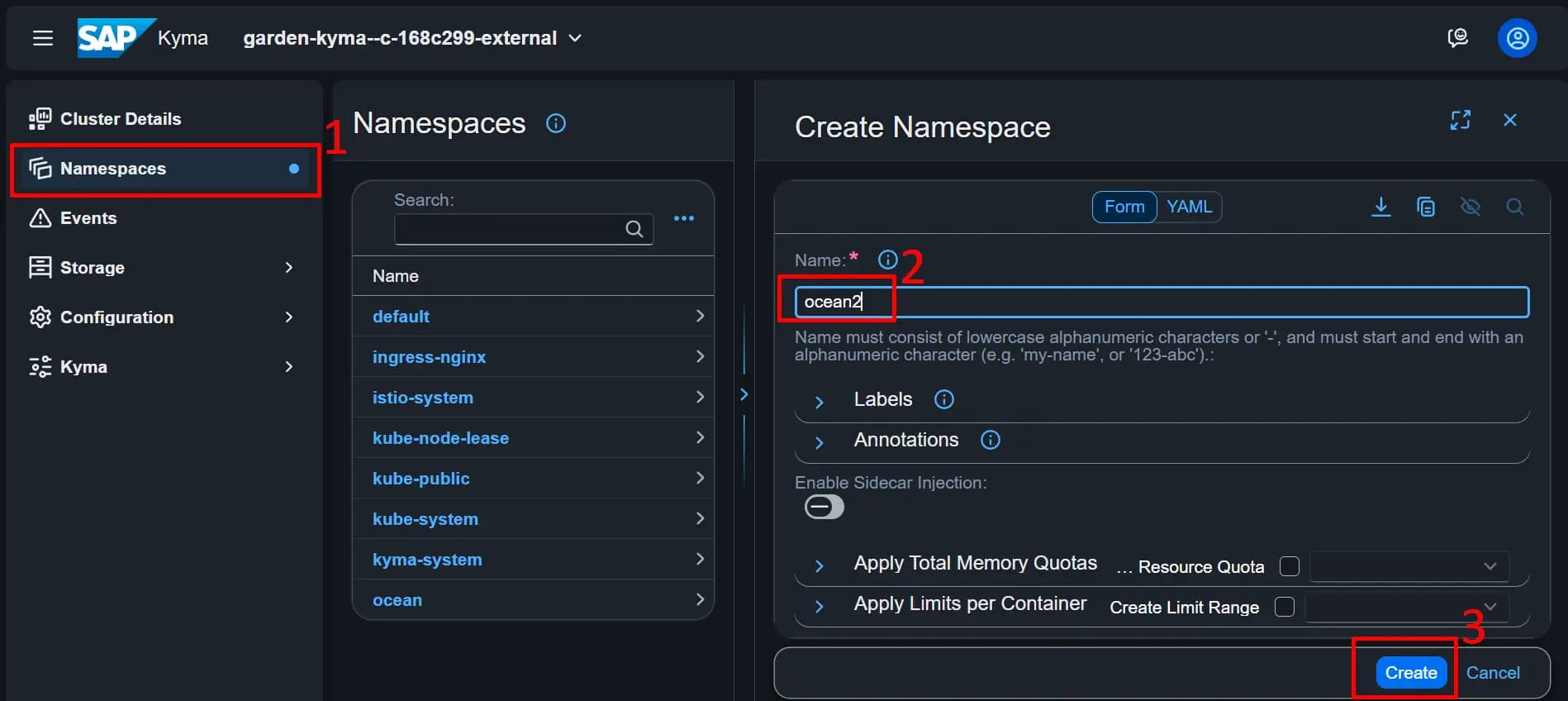

3. Create Namespace on Kyma

4. Access Kyma From Dev machine

4.1 Create Access Token

First we will go inside the newly created namespace.

Remember where you downloaded the kubeconfig file; we will use it in a minute.

4.2 Set Kubeconfig file as Default

If we don't set the config file we created as the default, we would need to pass it every time we run a kubectl command.

It becomes a chore immediately.

Instead, we will set it as the default for this command line session.

Go to the directory where you downloaded the file and run the following commands. (I will assume you downloaded it to your home directory.)

Windows

$env:KUBECONFIG = "$HOME\ocean2.yaml"

kubectl config current-context

And as a response, we will get:

ocean2-token

Great, the ocean2.yaml kubeconfig is now the default kubectl configuration.
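On Linux or macOS (or in Git Bash or WSL on Windows), the equivalent for a bash-like shell might look like the sketch below. It assumes, as above, that the file is saved as ocean2.yaml in the home directory.

```shell
# Point kubectl at the downloaded kubeconfig for this shell session only.
# Assumption: the file is ocean2.yaml in the home directory, as in the PowerShell example.
export KUBECONFIG="$HOME/ocean2.yaml"
echo "kubectl will read its configuration from: $KUBECONFIG"

# Then confirm the active context, exactly as on Windows:
#   kubectl config current-context
```

The setting only lasts for the current terminal session, so a new terminal needs the export again.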

Let's test accessing kyma now:

kubectl get pods

> Error from server (Forbidden): pods is forbidden: User "system:serviceaccount:ocean2:ocean2" cannot list resource "pods" in API group "" in the namespace "ocean2"

Good news and bad news.

Looks like we can reach Kyma now, but we can't access anything in it yet. We will need some privileges to access and/or create resources on Kyma.

4.3 Admin Access

Let's not beat around the bush and just get admin access for the ocean2 service account.

There is probably a more minimal role that only allows access to what we need, but right now I don't know what that is and don't want to spend time researching it, hence the admin role.

First, let's go back to our cluster view.

This is the role that we are going to use.

Next, we will go into Cluster Role Bindings and create a new role binding.

Fill in the details. Notice that we chose Service Account as the kind of subject we are binding the role to, and as the service account itself, we chose the namespace and the service account under it that we created in the previous section. Click Create and we are done. Let's test it.

Now, run that command once again.

kubectl get pods

> No resources found in ocean2 namespace.

Great, we have access. :)

The response is correct because we have not created anything yet. Let's rectify that.
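For reference, the binding created through the dashboard can also be written as a manifest. The sketch below is an assumed equivalent, not an export from my cluster: the binding name ocean2-admin-binding is made up for illustration, and cluster-admin is assumed to be the admin role picked in the dashboard. The subject is the ocean2 service account in the ocean2 namespace, as seen in the Forbidden message earlier.

```shell
# Write a ClusterRoleBinding manifest that mirrors the dashboard steps above.
cat > ocean2-admin-binding.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ocean2-admin-binding   # hypothetical name, pick your own
subjects:
  - kind: ServiceAccount
    name: ocean2
    namespace: ocean2
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin          # assumption: the built-in admin cluster role
EOF
echo "wrote ocean2-admin-binding.yaml"

# Apply it with a user that is already allowed to create cluster role bindings:
#   kubectl apply -f ocean2-admin-binding.yaml
```

Keeping the binding in a file makes it easy to recreate, and to swap in a narrower role later.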

5. Database

I want to deploy the database first, because our backend simply does not work without an active database connection. It literally does not start.

We can blame the architect. ( Hint: it is me. )

We could have gone with the frontend first, as it does not need the backend to run, but we would not be able to do anything with it, such as logging in, so it would be kind of pointless.

5.0 Describe the Files

There is a very detailed tutorial on DigitalOcean about deploying a PostgreSQL (pg) server to a Kubernetes cluster.

Here is the link:

How to Deploy Postgres to Kubernetes Cluster

You can check the tutorial itself, but in summary, we need a couple of files in order to start our pg server.

- Create a Configmap:

This will include the environment variables and secrets for our pg server.

For example:

--------------------------------------------------

postgres-configmap.yaml

--------------------------------------------------

apiVersion: v1
kind: ConfigMap
metadata:
  name: postgres-secret
  labels:
    app: postgres
data:
  POSTGRES_DB: <db_name>
  POSTGRES_USER: <db_user>
  POSTGRES_PASSWORD: <password>

- Create Persistent Volume

We will need a persistent volume, in case we need to restart, add or delete our pg pods on k8s. Otherwise, we would lose all of our data all the time.

Here is the file:

--------------------------------------------------

psql-pv.yaml

--------------------------------------------------

apiVersion: v1
kind: PersistentVolume
metadata:
  name: postgres-volume
  labels:
    type: local
    app: postgres
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /data/postgresql

You can see I assigned 1Gi of storage to the server. It is adequate for my use case, but you can adjust it however you see fit. If I remember correctly, the original tutorial uses 10Gi.

- Create a Persistent Volume Claim

We created a persistent volume, and now we need a claim so that our postgres can access that volume.

We can imagine this as giving our pg server permission to access the storage; at least, that's how I imagine it. The full technical details might be a little more complex.

--------------------------------------------------

psql-claim.yaml

--------------------------------------------------

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-volume-claim
  labels:
    app: postgres
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

- Create a Deployment

This is the step where we create the PostgreSQL server itself.

Here is the file:

--------------------------------------------------

ps-deployment.yaml

--------------------------------------------------

apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: "postgres:14"
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 5432
          envFrom:
            - configMapRef:
                name: postgres-secret
          volumeMounts:
            - mountPath: /var/lib/postgresql/data
              name: postgresdata
      volumes:
        - name: postgresdata
          persistentVolumeClaim:
            claimName: postgres-volume-claim

You can see the image we are using to create the deployment.

You can also see the configmap, the volume and the claim we will use to access that volume.

- Create a Service

Now that we have performed a pg deployment, we need to expose that deployment using a service.

--------------------------------------------------

ps-service.yaml

--------------------------------------------------

apiVersion: v1
kind: Service
metadata:
  name: postgres
  namespace: ocean2
  labels:
    app: postgres
spec:
  type: LoadBalancer
  ports:
    - port: 5432
      targetPort: 5432
  selector:
    app: postgres

Now that we have all the files we need, we can proceed to creating the pg server.
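One optional variation on the files above: despite its name, postgres-secret is a ConfigMap, so the password sits in plain configuration. Kubernetes also has a Secret kind meant for values like this. The sketch below is not part of my actual setup; the resource name postgres-credentials and the file name are hypothetical, and the placeholders are the same ones used in the ConfigMap.

```shell
# Write a Secret manifest carrying the same three variables as the ConfigMap.
cat > postgres-credentials.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: postgres-credentials   # hypothetical name
  labels:
    app: postgres
type: Opaque
stringData:                    # plain-text input, stored base64-encoded by the API server
  POSTGRES_DB: <db_name>
  POSTGRES_USER: <db_user>
  POSTGRES_PASSWORD: <password>
EOF
echo "wrote postgres-credentials.yaml"

# Apply it like the other files:
#   kubectl apply -f postgres-credentials.yaml
```

To use it, the envFrom entry in ps-deployment.yaml would reference it with secretRef (name: postgres-credentials) instead of configMapRef.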

5.1 Deployment

Here is how I did the deployment.

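I created a folder inside my project called db, right next to the frontend and backend folders. Then, I created each of these files inside the db folder.

ocean_backup.sql is the odd one out, but we will come back to that in a couple of minutes.

Let's run them one by one. Note that the outputs below show the volume and the claim as postgres-volume2 and postgres-volume-claim2; they were captured from a run that used those names, so with the files exactly as listed above you will see postgres-volume and postgres-volume-claim instead.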

From the command line, go to the db folder. Then;

- Create Config Map:

kubectl apply -f postgres-configmap.yaml

> configmap/postgres-secret created

kubectl get configmap

> NAME DATA AGE

> istio-ca-root-cert 1 3d3h

> kube-root-ca.crt 1 3d3h

> postgres-secret 3 98s

- Create Persistent Volume

kubectl apply -f psql-pv.yaml

> persistentvolume/postgres-volume2 created

kubectl get pv

> NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE

> postgres-volume2 1Gi RWX Retain Available manual <unset> 36s

- Create Persistent Volume Claim

kubectl apply -f psql-claim.yaml

> persistentvolumeclaim/postgres-volume-claim2 created

kubectl get pvc

> NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

> postgres-volume-claim2 Bound postgres-volume2 1Gi RWX manual <unset> 11s

- Check Claim is Bound to Persistent Storage

kubectl get pv

> NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE

> postgres-volume2 1Gi RWX Retain Bound ocean2/postgres-volume-claim2 manual <unset> 2m24s

- Create Deployment

kubectl apply -f ps-deployment.yaml

> deployment.apps/postgres created

kubectl get deployments

> NAME READY UP-TO-DATE AVAILABLE AGE

> postgres 1/1 1 1 8s

kubectl get pods

> NAME READY STATUS RESTARTS AGE

> postgres-758bd48d5d-j5h9x 1/1 Running 0 37s

I want to be able to reach the db using DBeaver, so I will expose it to the internet using a Load Balancer service.

- Create Service

kubectl apply -f ps-service.yaml

> service/postgres created

kubectl get svc

> NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

> postgres LoadBalancer 100.109.88.254 afa0152a7eb1944c594aa6734fb4d44b-779836742.us-east-1.elb.amazonaws.com 5432:30139/TCP 11s

kubectl get pods

> NAME READY STATUS RESTARTS AGE

> postgres-758bd48d5d-gj9pg 1/1 Running 0 5m36s

5.2 Data

Alright, we have our pg server up and running. Now we need some data.

I already have a database that is running locally and I want to use that. Let's create a backup file. We will use that to create a db on the Kyma side.

I am using DBeaver and I will use that to create the backup file.

Then we will use the backup file to create a database on Kyma. In the commands below, use the pod name that kubectl get pods reports in your cluster; the names differ in my outputs because they were captured at different times.

- Copy Database Backup to Kyma PostgreSQL

kubectl cp ocean_backup.sql postgres-758bd48d5d-gj9pg:/tmp/ocean_backup.sql

- List Pods and Get the PostgreSQL Pod Name

kubectl get pods

> NAME READY STATUS RESTARTS AGE

> postgres-679f8fc7bf-9skjx 1/1 Running 0 171m

- Run the Backup File With psql

kubectl exec -it postgres-679f8fc7bf-9skjx -- psql -U postgres -d OceanO -f /tmp/ocean_backup.sql

- Here you will see a bunch of SQL commands scroll by

- Get the running service for PostgreSQL

kubectl get svc

> NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

> postgres LoadBalancer 100.109.88.254 afa0152a7eb1944c594aa6734fb4d44b-779836742.us-east-1.elb.amazonaws.com 5432:30139/TCP 177m

Under EXTERNAL-IP, you can see the external address of the load balancer. On port 5432, we can reach our PostgreSQL server. Let's create a connection to it in DBeaver.

Now let's restart the pg deployment.

If the persistent volume is set up correctly, we should still be able to reach the database and its data after we restart the deployment.

- Restart the deployment

kubectl rollout restart deployment postgres

kubectl get pods

> NAME READY STATUS RESTARTS AGE

> postgres-db67c9b56-6xqds 1/1 Running 0 15s

- Notice that the name of the pod has changed.

Let's see if we can still access the database.

Looks like we can.
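Since the pod name changes on every restart, hard-coding it in the kubectl cp and kubectl exec commands gets tedious. A possible helper, sketched for a bash-like shell, looks the pod up through the app=postgres label from the deployment. The function names are made up for this example, and the user and database arguments are whatever you used earlier (postgres and OceanO in my case).

```shell
# Print the name of the first pod carrying the app=postgres label.
postgres_pod() {
  kubectl get pods -l app=postgres -o jsonpath='{.items[0].metadata.name}'
}

# Copy a SQL backup into the pod and run it with psql.
# Usage: restore_backup <backup-file> <db-user> <db-name>
restore_backup() {
  backup_file=$1
  db_user=$2
  db_name=$3
  pod=$(postgres_pod) || return 1
  [ -n "$pod" ] || { echo "no postgres pod found" >&2; return 1; }
  kubectl cp "$backup_file" "$pod:/tmp/ocean_backup.sql" || return 1
  kubectl exec "$pod" -- psql -U "$db_user" -d "$db_name" -f /tmp/ocean_backup.sql
}
```

With the functions loaded, the restore from section 5.2 becomes restore_backup ocean_backup.sql postgres OceanO. Right after a restart, two pods can exist for a short while, so it is worth waiting until only the new one is listed.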

This concludes our setup for the database.

Next, we are deploying the backend.

6. Backend

Our backend is a .NET application.

These are the steps that we will follow:

- Create a docker image of the project.

- Push the docker image to docker hub.

- Create deployment on Kyma.

- Create a service on Kyma.

- Create an ingress service on Kyma.

6.1 Create Docker Image

Here is the dockerfile for the backend:

# Build stage: compile and publish the WebAPI project
FROM mcr.microsoft.com/dotnet/sdk:8.0 AS build
WORKDIR /source
COPY . .
WORKDIR /source/WebAPI
RUN dotnet publish -c release -o /app

# Runtime stage: copy the published output into the smaller ASP.NET image
FROM mcr.microsoft.com/dotnet/aspnet:8.0
WORKDIR /app
COPY --from=build /app ./
ENTRYPOINT ["dotnet", "WebAPI.dll"]
EXPOSE 5000

It is very simple. Two stages, build and serve. Keep in mind the 'EXPOSE 5000'. It will be important later.

Now, let's build our image.

Go to your backend folder.

docker build -t <user-name>/ocean-be:latest .

6.2 Push Docker Image To Docker Hub

If you remember, in the prerequisites of the tutorial, I said that we needed a Docker Hub account. I won't cover how to get one in this tutorial; I will assume that you have Docker installed and that you are logged in to Docker Hub.

Let's push the image to Docker Hub.

docker push <user-name>/ocean-be:latest

Done and done.

6.3 Create Deployment

Now, like the PostgreSQL deployment step, we will use a deployment yaml file. In that file, we were using an already prepared docker image named postgres:14.

Here in our deployment file for the backend, we will use the newly pushed docker image.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ocean-be
  labels:
    app: ocean-be
    version: "1"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ocean-be
      version: "1"
  template:
    metadata:
      labels:
        app: ocean-be
        version: "1"
    spec:
      containers:
        - name: ocean-be
          image: <user-name>/ocean-be:latest
          imagePullPolicy: Always
          resources:
            requests:
              cpu: "0.25"
              memory: "256Mi"
            limits:
              cpu: "1"
              memory: "512Mi"
          ports:
            - name: http-traffic
              containerPort: 5000 # the port the application listens on (see ASPNETCORE_URLS)
              protocol: TCP
          env:
            - name: ASPNETCORE_ENVIRONMENT
              value: "Development"
            - name: ASPNETCORE_HTTP_PORT
              value: "5000"
            - name: ASPNETCORE_URLS
              value: "http://+:5000"
            - name: DOTNET_PRINT_TELEMETRY_MESSAGE
              value: "False"

You can see that we are passing environment variables to the container.

In a .NET WebAPI project, we have multiple appsettings files, and the application chooses which one to use based on the environment. We are telling it that it is running in the Development environment. I already updated the appsettings file for that environment with the connection information of our new pg server, which is why I am telling it to use that environment. We are also telling it to use port 5000 for the backend. This is different from the EXPOSE 5000 line in the Dockerfile: that one documents the port for the container, while this one configures the .NET application itself. Obviously, they should match.

You can create the deployment file anywhere you want. I create it at the root of the backend project itself, along with the service file, or you can have a completely separate folder for k8s deployments and run those files there. Your choice.

Create a deployment.yaml file, copy and paste the contents of the example file above into that file (and update if necessary) then run the following command:

- Create deployment

kubectl apply -f .\deployment.yaml

> deployment.apps/ocean-be created

kubectl get pods

> NAME READY STATUS RESTARTS AGE

> ocean-be-77ddb8bf4f-8c7sg 1/1 Running 0 30s

> postgres-db67c9b56-6xqds 1/1 Running 0 11h

Our backend deployment is up and running.

6.4 Create Service

Same with the PostgreSQL deployment, we will also need a service for our backend.

The file we will be using is this:

--------------------------------------------------

service.yaml

--------------------------------------------------

apiVersion: v1
kind: Service
metadata:
  name: ocean-be
  labels:
    app: ocean-be
spec:
  ports:
    - name: http
      port: 80 # Exposed port on the service (ClusterIP)
      targetPort: 5000 # The port the application is listening on
  selector:
    app: ocean-be

We can apply the service with the following command:

- Create service

kubectl apply -f .\service.yaml

> service/ocean-be created

kubectl get svc

> NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

> ocean-be ClusterIP 100.105.221.200 <none> 80/TCP 18s

> postgres LoadBalancer 100.109.88.254 afa0152a7eb1944c594aa6734fb4d44b-779836742.us-east-1.elb.amazonaws.com 5432:30139/TCP 17h

Please note that there is no EXTERNAL-IP for our ocean-be service. We will be creating an ingress service to be able to reach it.

6.5 Create Ingress Service

We will be using the ingress service for the backend, but we also will use it later for the frontend.

Right now, we will deploy an incomplete version of the ingress configuration. When we are deploying the frontend, we will update the config file.

The ingress service yaml file:

--------------------------------------------------

ingress.yaml

--------------------------------------------------

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ocean-ingress2
  namespace: ocean2
  labels:
    app: ocean-ingress2
spec:
  ingressClassName: nginx
  rules:
    - http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: ocean-be
                port:
                  number: 80

I created yet another folder in the project root called services. And inside that, I created a file called ingress.yaml.

You can do the same or you can create this file anywhere you want. Once done, copy the contents above and run the following command:

- Create Ingress Service

kubectl apply -f ingress.yaml

> ingress.networking.k8s.io/ocean-ingress2 created

kubectl get ingress ocean-ingress2

> NAME CLASS HOSTS ADDRESS PORTS AGE

> ocean-ingress2 nginx * abb469b4569eb42b7a715fcfded963ff-1346055049.us-east-1.elb.amazonaws.com 80 40s

As the last step, we can check if this address returns anything.

I know that /api/Language/GetAllLanguages will return a JSON that contains some languages.

So in the browser, I can try calling that address.

Voila!

The backend is live. This also shows that the backend is able to communicate with the db, as that information is coming from the database.
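The same check can be made from the command line instead of the browser. A small sketch, assuming curl is installed and using the ingress address and endpoint from above (your address will differ):

```shell
# Assumption: INGRESS_HOST is the ADDRESS column from `kubectl get ingress ocean-ingress2`.
INGRESS_HOST="abb469b4569eb42b7a715fcfded963ff-1346055049.us-east-1.elb.amazonaws.com"
API_URL="http://${INGRESS_HOST}/api/Language/GetAllLanguages"

echo "Requesting ${API_URL}"
# -s: no progress output, -f: treat HTTP errors as failures, --max-time: give up after 10 seconds.
curl -sf --max-time 10 "$API_URL" \
  || echo "Request failed; check the ingress address and the backend pod." >&2
```

A JSON response here means the whole chain works: ingress, service, backend and database.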

All that remains is the frontend, and then we will have a fullstack app with db and all, that is running on a k8s cluster on SAP BTP Kyma.

Exciting times!!!

7. Frontend

On the frontend, we are running Angular.

The same spiel as the backend. We will make a deployment, then a service and we will update our ingress. And before all that, we will prepare our docker image and push it to dockerhub.

- Create Docker Image

- Push Docker Image

- Create deployment

- Create Service

- Update Ingress Service

7.0 Project Configuration

In Angular, we have a couple of config files that determine the URL of the backend based on the environment the project is running in. Same as with the backend, we will assume that we are running in the development environment and update that file with the ingress address followed by /api.

INGRESS-EXTERNAL-IP/api

E.g. abb469b4569eb42b7a715fcfded963ff-1346055049.us-east-1.elb.amazonaws.com/api

{
  "serviceUrl": "http://abb469b4569eb42b7a715fcfded963ff-1346055049.us-east-1.elb.amazonaws.com/api",
  "buildNumber": "dev",
  "version": "dev",
  "environment": "DEV"
}

7.1 Create Docker Image

The Dockerfile for the frontend project:

# Build stage: install dependencies and build the Angular app
FROM node:20-alpine AS build
ENV NODE_VERSION=20.17.0
ARG ENV=dev
WORKDIR /app
COPY . .
RUN npm install
RUN npm run build:$ENV

# Serve stage: copy the build output into nginx
FROM nginx:alpine
ARG ENV=dev
RUN rm -rf /usr/share/nginx/html/*
COPY --from=build /app/dist/apollo-ng/ /usr/share/nginx/html
COPY ./nginx.conf /etc/nginx/conf.d/default.conf
EXPOSE 80

The build command:

docker build -t <user-name>/ocean-fe:latest .

7.2 Push Docker Image

Let's push the image to Docker Hub.

docker push <user-name>/ocean-fe:latest

7.3 Create Deployment

The deployment file:

--------------------------------------------------

deployment.yaml

--------------------------------------------------

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ocean-fe
  labels:
    app: ocean-fe
    version: "1"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ocean-fe
      version: "1"
  template:
    metadata:
      labels:
        app: ocean-fe
        version: "1"
    spec:
      containers:
        - name: ocean-fe
          image: <user-name>/ocean-fe:latest
          imagePullPolicy: Always
          resources:
            requests:
              cpu: "0.25"
              memory: "128Mi"
            limits:
              cpu: "0.5"
              memory: "256Mi"
          ports:
            - name: http-traffic
              containerPort: 80
              protocol: TCP

Let's run this.

- Create Deployment

kubectl apply -f .\deployment.yaml

> deployment.apps/ocean-fe created

kubectl get pods

> NAME READY STATUS RESTARTS AGE

> ocean-be-77ddb8bf4f-8c7sg 1/1 Running 0 1h

> ocean-fe-7b6b9d669b-cxhmq 1/1 Running 0 16s

> postgres-db67c9b56-6xqds 1/1 Running 0 12h

The deployment is successful.

7.4 Create Service

Let's create our service.

The service file:

--------------------------------------------------

service.yaml

--------------------------------------------------

apiVersion: v1
kind: Service
metadata:
  name: ocean-fe
  labels:
    app: ocean-fe
    version: "1"
spec:
  ports:
    - name: http-traffic
      targetPort: 80
      port: 80
      protocol: TCP
  selector:
    app: ocean-fe
    version: "1"

The command:

- Create Service

kubectl apply -f .\service.yaml

> service/ocean-fe created

kubectl get svc

> NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

> ocean-be ClusterIP 100.105.221.200 <none> 80/TCP 88m

> ocean-fe ClusterIP 100.108.40.41 <none> 80/TCP 20s

> postgres LoadBalancer 100.109.88.254 afa0152a7eb1944c594aa6734fb4d44b-779836742.us-east-1.elb.amazonaws.com 5432:30139/TCP 18h

All our services are up.

Do not be alarmed that there is no external IP for ocean-fe.

Remember, we can reach the backend although there is no external IP for that either. After we update the ingress for the frontend, we will be able to reach it.

7.5 Update Ingress Service

This is the last step of the tutorial.

We will update the ingress service in order to make the frontend project reachable from the net.

The service file:

--------------------------------------------------

ingress.yaml

--------------------------------------------------

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ocean-ingress2
  namespace: ocean2
  annotations:
  labels:
    app: ocean-ingress2
spec:
  ingressClassName: nginx
  rules:
    - http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: ocean-be
                port:
                  number: 80
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ocean-fe
                port:
                  number: 80

You can see that we made just one small addition: the path for the root. This means that when we request the root of the ingress, it routes the request to our frontend. Likewise, requests for /api are routed to the backend.
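To make the routing rule concrete: with pathType: Prefix, a path matches when it equals the prefix or continues below it, and the longest matching prefix wins. The shell function below only imitates that decision for the two rules above; it is an illustration, not how the ingress controller is implemented, and /login is just an example path.

```shell
# Mimic the two Prefix rules from ingress.yaml: /api -> ocean-be, / -> ocean-fe.
route() {
  case "$1" in
    /api|/api/*) echo "ocean-be" ;;   # /api itself or anything below it
    /*)          echo "ocean-fe" ;;   # everything else falls through to the root rule
  esac
}

route /api/Language/GetAllLanguages   # prints ocean-be
route /login                          # prints ocean-fe
route /                               # prints ocean-fe
```

Note that a path such as /apiary would go to the frontend, because Prefix matching works on whole path segments.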

Let's update the ingress service now.

kubectl apply -f ingress.yaml

> ingress.networking.k8s.io/ocean-ingress2 reconfigured

kubectl get ingress ocean-ingress2

> NAME CLASS HOSTS ADDRESS PORTS AGE

> ocean-ingress2 nginx * abb469b4569eb42b7a715fcfded963ff-1346055049.us-east-1.elb.amazonaws.com 80 94m

No problems with the ingress service.

Now, the moment of truth!!!

Go to the ingress address,

abb469b4569eb42b7a715fcfded963ff-1346055049.us-east-1.elb.amazonaws.com in my case.

And we are done.

CONCLUSION

Here is a summary of the steps we took.

First, we created an SAP BTP account, then created a Kyma environment on it.

Second, we set up a PostgreSQL server on said Kyma environment.

Third, we deployed our backend and tested its connection with the database server.

Fourth and last, we deployed our frontend and logged in to our app, confirming that all the systems are communicating with each other.

At the end of the tutorial, we have a full-stack app, database and all, running on the SAP BTP Kyma Kubernetes environment.

Hope you guys learned a lot and I really hope that this tutorial is useful to you in your projects.

Balance in all things.